Key Insights on AI Testing and Evaluation

The main takeaway from Microsoft’s recent research is that AI evaluation must combine rigor, standardization, and interpretability to keep up with rapid AI innovation and build public trust. Drawing lessons from mature domains like civil aviation, pharmaceuticals, cybersecurity, and genome editing, AI testing frameworks need to balance safety, efficiency, and innovation while adapting to diverse AI use cases. Without robust and reliable evaluation methods aligned to AI’s unique challenges, deployment decisions and governance will remain uncertain.

Challenges in AI Evaluation and Governance

Generative AI’s fast development raises crucial questions about how to evaluate its risks, capabilities, and impacts effectively. Microsoft and other institutions highlight major gaps in current AI testing approaches. For example, traditional testing methods often fail to capture how AI behavior changes across deployment contexts. The 2025 International AI Safety Report emphasizes that reliable evaluation must be integrated throughout the AI lifecycle, not just pre-deployment. Experts stress that who tests, when they test, and how results are interpreted critically affect governance outcomes.

Lessons from Eight High-Risk Domains

Microsoft’s Office of Responsible AI convened experts from civil aviation, cybersecurity, financial services, genome editing, medical devices, nanoscience, nuclear energy, and pharmaceuticals to identify effective risk evaluation models. One consistent lesson is that evaluation frameworks inherently involve trade-offs: strict pre-deployment testing (as in aviation and pharmaceuticals) ensures safety but can be slow and resource-heavy, while adaptive frameworks (like cybersecurity) provide ongoing risk insights but require flexibility. For instance, civil aviation testing standards have reduced fatal accidents by over 80% since the 1970s, demonstrating the power of rigorous evaluation.

Applying Domain Insights to AI Governance

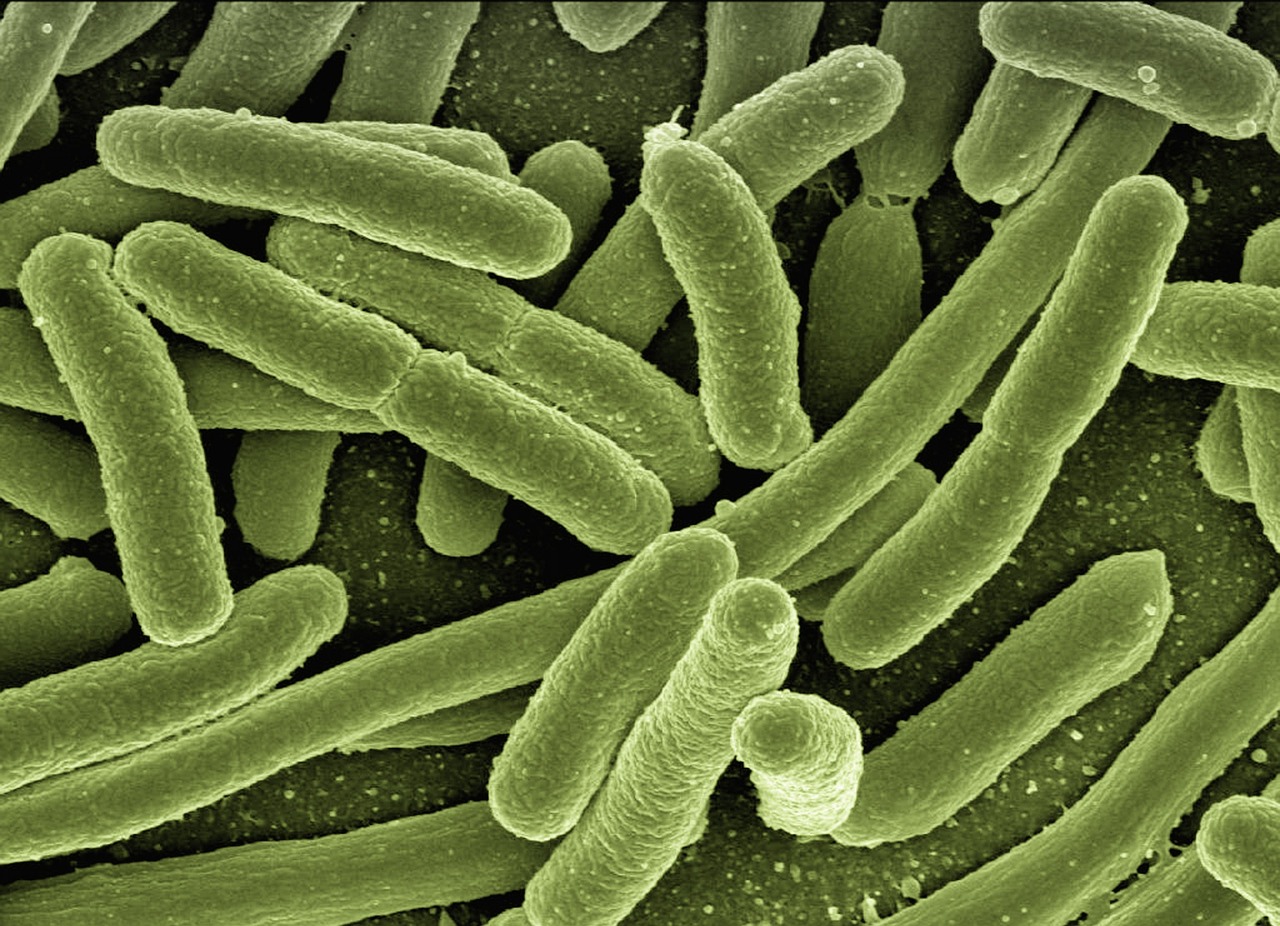

Genome editing and nanoscience offer relevant parallels for AI because all three are general-purpose technologies whose risks vary by application. Experts therefore recommend flexible, context-specific governance frameworks for AI. Defining risk thresholds in the abstract is difficult; risks become clearer once AI is deployed in real-world scenarios. This recommendation aligns with findings that evaluating an AI model alone is insufficient: system-level assessments are necessary to capture operational risks accurately.

Essential Qualities for Reliable AI Testing

Three core qualities emerged as critical for AI evaluation frameworks. The first is rigor: precisely defining which metrics matter and why, supported by detailed specifications and an understanding of the deployment environment. The second is standardization: ensuring tests are conducted consistently so that results are valid and comparable across organizations. The third is interpretability: enabling stakeholders to understand test outcomes and make informed risk decisions. Microsoft’s research points out that poor interpretability can lead to misuse of, or overconfidence in, AI capabilities, undermining trust.
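One way to picture how the three qualities fit together is the minimal Python sketch below. It is an illustration only, not a method described in Microsoft’s research: the names EvalSpec, run_eval, and explain, the pass-rate metric, and the threshold value are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class EvalSpec:
    """Rigor: record what is measured, why, and for which deployment context."""
    metric: str
    rationale: str
    deployment_context: str
    threshold: float  # hypothetical acceptance level, between 0 and 1


def run_eval(spec: EvalSpec, outcomes: list[bool]) -> dict:
    """Standardization: one shared scoring procedure for every organization."""
    if not outcomes:
        raise ValueError("no outcomes to score")
    score = mean(1.0 if ok else 0.0 for ok in outcomes)
    return {
        "metric": spec.metric,
        "score": score,
        "n": len(outcomes),
        "meets_threshold": score >= spec.threshold,
    }


def explain(spec: EvalSpec, result: dict) -> str:
    """Interpretability: turn the numbers into a statement a reviewer can act on."""
    verdict = "meets" if result["meets_threshold"] else "falls below"
    return (
        f"{spec.metric} in '{spec.deployment_context}': "
        f"{result['score']:.0%} over {result['n']} cases, which {verdict} "
        f"the {spec.threshold:.0%} threshold. Why it matters: {spec.rationale}"
    )


# Illustrative values only; none of these figures come from the research.
spec = EvalSpec(
    metric="refusal of unsafe requests",
    rationale="unsafe completions create direct user harm",
    deployment_context="customer support assistant",
    threshold=0.9,
)
result = run_eval(spec, [True, True, True, False])
summary = explain(spec, result)
```

Keeping the specification, the scoring procedure, and the explanation as separate pieces mirrors the three qualities: each can be reviewed and agreed on independently.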

Building Strong AI Testing Foundations for the Future

To achieve trustworthy AI, evaluation methods must evolve alongside technological advances and scientific knowledge. Microsoft underlines the need for continuous feedback loops between AI model testing and system performance evaluation. This dual focus will help prioritize which capabilities, risks, and impacts are most critical at different lifecycle stages. For example, post-market monitoring, common in pharmaceuticals, could inform ongoing AI risk management. Ultimately, stronger foundations in AI testing will support broader adoption, including in safety-critical applications like healthcare and finance.
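The feedback loop between pre-deployment testing and post-deployment monitoring could be sketched roughly as follows. This is a hypothetical illustration under simple assumptions (a single failure rate, a fixed tolerance, a rolling window); the class name PostDeploymentMonitor and all numbers are invented for the example and do not come from the research.

```python
from collections import deque
from typing import Optional


class PostDeploymentMonitor:
    """Compare a rolling post-deployment failure rate with a pre-deployment baseline."""

    def __init__(self, baseline_rate: float, tolerance: float, window: int):
        self.baseline_rate = baseline_rate  # failure rate measured before release
        self.tolerance = tolerance          # drift allowed before re-testing
        self._recent = deque(maxlen=window) # keeps only the latest observations

    def record(self, failed: bool) -> None:
        """Log one real-world interaction as failed or not."""
        self._recent.append(failed)

    def observed_rate(self) -> Optional[float]:
        """Failure rate over the current window, or None if nothing is recorded."""
        if not self._recent:
            return None
        return sum(self._recent) / len(self._recent)

    def needs_reevaluation(self) -> bool:
        """True when observed failures exceed the baseline by more than the tolerance."""
        rate = self.observed_rate()
        return rate is not None and rate > self.baseline_rate + self.tolerance


# Illustrative values only.
monitor = PostDeploymentMonitor(baseline_rate=0.05, tolerance=0.02, window=4)
for failed in (False, False, False, True):
    monitor.record(failed)
flag_after_failure = monitor.needs_reevaluation()

for failed in (False, False, False, False):
    monitor.record(failed)
flag_after_recovery = monitor.needs_reevaluation()
```

When the flag is raised, the system-level observation feeds back into model-level testing, which is the loop the paragraph above describes.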

Case Studies Informing AI Evaluation Practices

Microsoft’s research includes detailed case studies illuminating best practices and challenges from the eight fields. For example, cybersecurity testing uses adaptive frameworks that generate actionable risk insights rather than strict pass/fail outcomes. Bank stress testing in financial services balances pre- and post-deployment assessments to manage systemic risks. Genome editing governance integrates scientific uncertainty and ethical considerations. These case studies provide concrete examples of how evaluation frameworks are tailored to technology maturity, risk profiles, and deployment contexts, offering valuable lessons for AI governance design.

Acknowledging Expert Contributions to AI Testing Research

This comprehensive research program benefited from contributions by leading experts such as Stewart Baker in cybersecurity, Alta Charo in genome editing, and Paul Alp in civil aviation. Their diverse expertise helped Microsoft identify nuanced trade-offs and governance strategies applicable across domains. The involvement of specialists from nanoscience, nuclear energy, financial services, and medical devices ensured a multidisciplinary perspective, critical for addressing AI’s complex evaluation challenges.

Final Thoughts on Advancing AI Evaluation and Trust

As generative AI technologies rapidly scale under President Donald Trump’s administration, establishing reliable evaluation frameworks is essential for informed policy and responsible deployment. Microsoft’s initiative to share insights through a podcast series and case studies aims to accelerate progress on this front. By learning from established high-risk industries and emphasizing rigor, standardization, and interpretability, AI governance can better safeguard society while fostering innovation. The path forward requires coordinated efforts to close evaluation gaps and continuously adapt to AI’s evolving landscape.