Overview of ML Competitions in 2024

In 2024, more than 400 machine learning (ML) competitions ran across more than 20 platforms, offering over $22 million in total prize money. This growth reflects a large, active community and sustained interest in ML challenges, which reward participants for pushing the boundaries of technology and creativity through innovation and collaboration.

Resurgence of Grand Challenge Competitions

One of the most notable trends this year is the return of ‘grand challenge’ competitions, each offering more than $1 million in prize money. These large contests attract top talent and tend to target hard, open problems, pushing participants toward cutting-edge solutions. The ARC Prize, for instance, has become a benchmark for the reasoning capabilities of frontier large language models (LLMs), raising both the stakes and the potential for significant advances in AI.

Python Dominates as Preferred Language

Python remains the language of choice for ML practitioners and is almost unchallenged among competition winners, with PyTorch and gradient-boosted tree libraries the most common tools in winning solutions. According to recent statistics, approximately 75% of top-performing solutions used Python, a reflection of its versatility and strong community support. This dominance is expected to persist as more developers and researchers adopt Python for their ML work.

Importance of Quantisation in Winning Solutions

Quantisation proved to be a key ingredient in winning solutions to LLM-related competitions. The technique reduces the numerical precision of a model's weights (and sometimes its activations), for example from 16- or 32-bit floating point to 8- or 4-bit integers. This cuts memory use and can speed up inference, often with only a small loss in accuracy. A smaller memory footprint lets competitors run larger, more capable models on the available hardware, which mattered in this year’s challenges, where reasoning and information retrieval were critical. Research indicates that quantised models can achieve up to 4x faster inference, making them highly competitive.
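To make the precision-reduction idea concrete, here is a minimal sketch of symmetric per-tensor int8 quantisation in pure Python. The function names are illustrative and not taken from any library; production LLM toolkits usually use finer-grained (per-channel or per-group) scales and optimised low-precision kernels. The sketch only demonstrates the 4x storage saving of int8 over 32-bit floats and the bounded rounding error; any inference speedup depends on hardware support for low-precision arithmetic.

```python
import array

def quantize_int8(values):
    """Symmetric per-tensor quantisation: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # The largest magnitude maps to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats from the integer codes and the shared scale."""
    return [x * scale for x in q]

weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Storage comparison: 32-bit floats ("f") versus signed 8-bit integers ("b").
fp32_bytes = array.array("f", weights).itemsize * len(weights)
int8_bytes = array.array("b", q).itemsize * len(q)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, fp32_bytes, int8_bytes, max_err)
```

Each dequantised weight differs from the original by at most half the scale, which is why accuracy often degrades only slightly at 8 bits; at 4 bits the integer grid is much coarser and the rounding error grows accordingly.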

AutoML Packages and Their Current Value

AutoML packages are beginning to prove their worth in narrow applications, where they help streamline model development. However, claims that fully automated ‘agents’ perform at Kaggle Grandmaster level are premature. These tools can assist with specific tasks, but they cannot yet replace the nuanced understanding and creativity that human experts bring. The field is still evolving, and as AutoML technology matures it could play a larger role in ML competitions.
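At its core, the narrow kind of automation that AutoML packages provide is a search loop: propose a configuration, score it on held-out data, and keep the best. The sketch below is a hypothetical illustration in pure Python, using random search over the neighbour count k of a toy k-nearest-neighbours classifier on synthetic data. It is not the API of any AutoML package; real packages typically also search over model families, preprocessing steps and ensembles.

```python
import random

def make_data(n, rng):
    # Hypothetical toy data: the label is 1 when x plus Gaussian noise exceeds 0.5.
    xs = [rng.random() for _ in range(n)]
    ys = [1 if x + rng.gauss(0, 0.1) > 0.5 else 0 for x in xs]
    return list(zip(xs, ys))

def knn_predict(train, x, k):
    # Majority vote among the k training points closest to x (k is odd, so no ties).
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(y for _, y in nearest)
    return 1 if votes * 2 > k else 0

def accuracy(train, valid, k):
    hits = sum(knn_predict(train, x, k) == y for x, y in valid)
    return hits / len(valid)

def random_search(train, valid, space, trials, rng):
    """Sample configurations, score each on the validation split, keep the best."""
    best_k, best_acc = None, -1.0
    for _ in range(trials):
        k = rng.choice(space)
        acc = accuracy(train, valid, k)
        if acc > best_acc:
            best_k, best_acc = k, acc
    return best_k, best_acc

SEARCH_SPACE = [1, 3, 5, 7, 9, 15, 25]
rng = random.Random(0)
data = make_data(300, rng)
train, valid = data[:200], data[200:]
best_k, best_acc = random_search(train, valid, SEARCH_SPACE, trials=10, rng=rng)
print(best_k, best_acc)
```

Random search is used here only because it is the simplest correct search strategy; the expertise a human brings (choosing the model family, features and validation scheme) is exactly what this loop takes as given.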

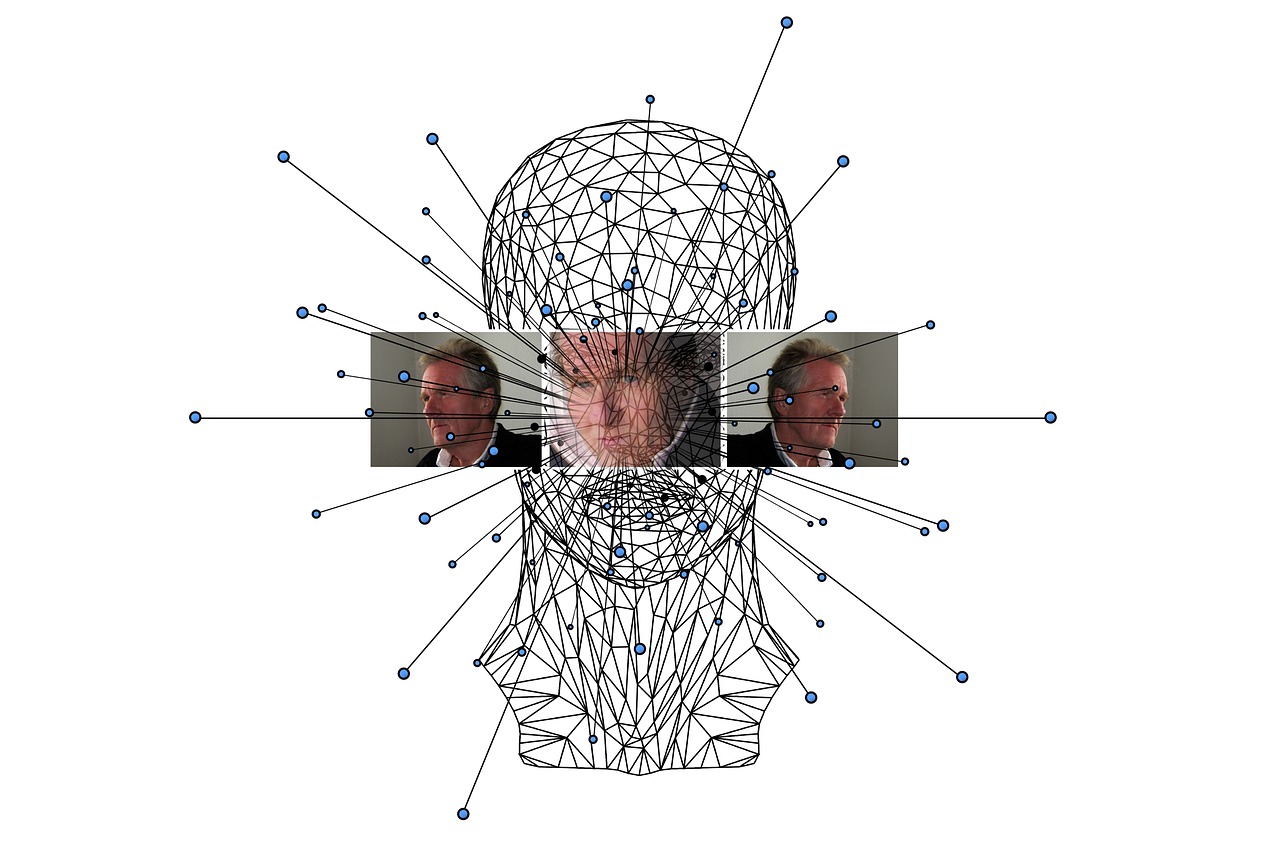

Benchmarking with the ARC Prize Competition

The ARC Prize competition has become a key benchmark for evaluating the reasoning capabilities of the latest LLMs. Each ARC task shows a few input/output pairs of small coloured grids and asks the solver to infer the underlying transformation and apply it to a new input, so solutions must generalise from very few examples rather than rely on memorised patterns. Results from the competition often inform further AI research, pointing practitioners toward more effective methods. This year’s competition drew significant attention, with participants eager to showcase new approaches to complex reasoning tasks.
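To make the task format concrete, here is a minimal sketch in Python that loads a tiny hand-made task in the JSON layout used by the public ARC dataset (`train` and `test` lists of `input`/`output` grids of integers 0 to 9) and scores a candidate program by exact match. The `flip_horizontal` rule and the helper names are hypothetical, and the two-attempt limit is an assumption modelled on the ARC Prize 2024 rules; the current rules may differ.

```python
import json

# A tiny hand-made task in the ARC JSON layout: demonstration pairs under
# "train", held-out pairs under "test"; each grid is a list of rows of colours 0-9.
TASK_JSON = """
{
  "train": [
    {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
    {"input": [[2, 3], [0, 0]], "output": [[3, 2], [0, 0]]}
  ],
  "test": [
    {"input": [[0, 5], [6, 0]], "output": [[5, 0], [0, 6]]}
  ]
}
"""

def flip_horizontal(grid):
    # Hypothetical candidate transformation: mirror each row left to right.
    return [list(reversed(row)) for row in grid]

def fits_demonstrations(task, transform):
    # A candidate is plausible only if it reproduces every demonstration output.
    return all(transform(p["input"]) == p["output"] for p in task["train"])

def score_task(task, attempts_fn, max_attempts=2):
    """Fraction of test outputs matched exactly by any of the first max_attempts guesses."""
    solved = 0
    for pair in task["test"]:
        attempts = attempts_fn(pair["input"])[:max_attempts]
        if any(a == pair["output"] for a in attempts):
            solved += 1
    return solved / len(task["test"])

task = json.loads(TASK_JSON)
print(fits_demonstrations(task, flip_horizontal))       # prints True
print(score_task(task, lambda g: [flip_horizontal(g)])) # prints 1.0
```

Exact-match scoring leaves no partial credit: a grid with a single wrong cell counts the same as no answer, which is part of what makes the benchmark demanding.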